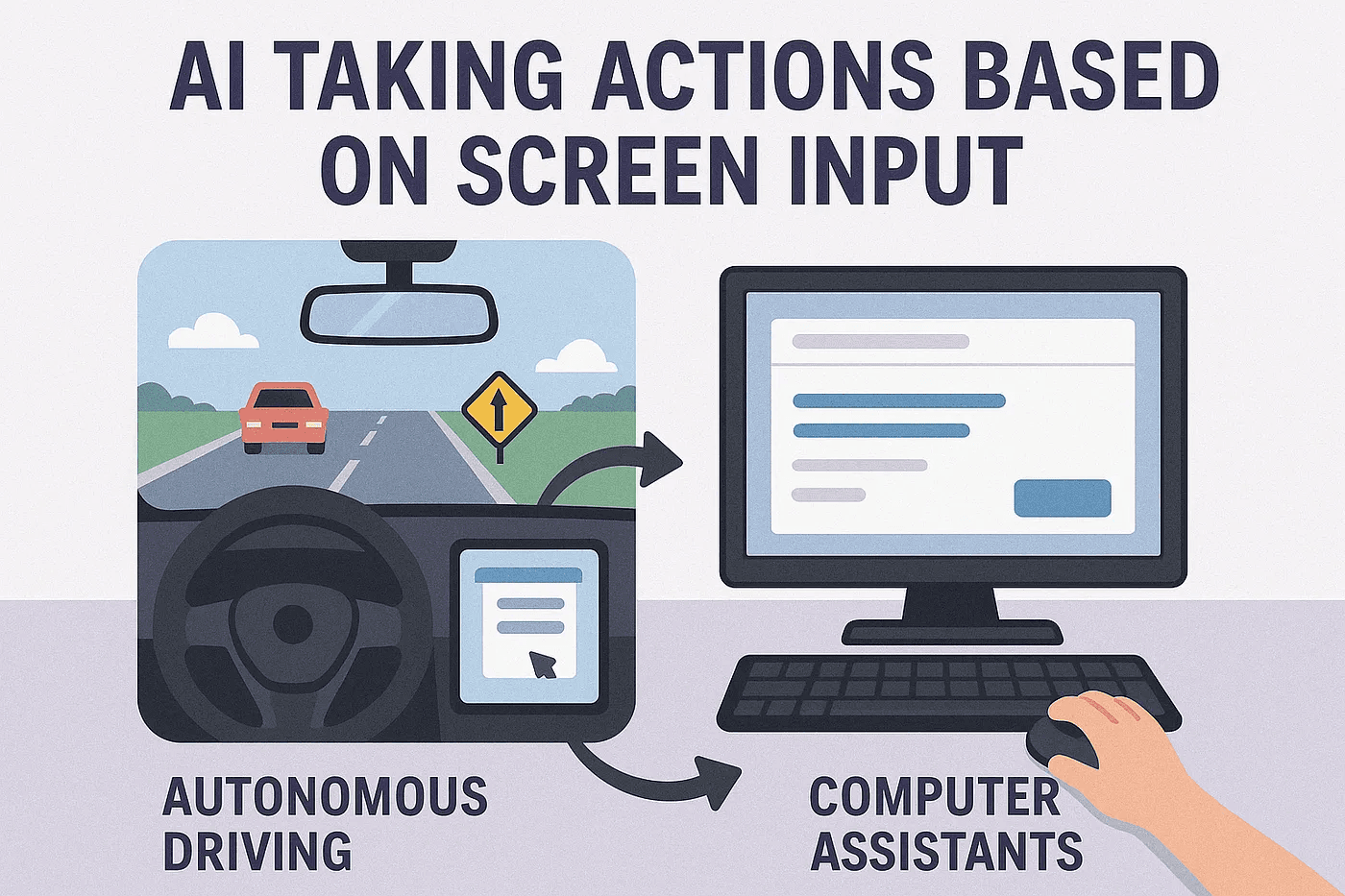

Autonomous cars have come a long way. They can now drive on highways, stop for pedestrians, and make decisions quickly based on what they “see” through cameras and sensors. This amazing progress shows how powerful AI can be when it uses visual input to act in the real world. But there’s another exciting use for this kind of AI: teaching it to use computers like we do.

Imagine an AI that can look at a computer screen, figure out what’s happening, and then click buttons, type words, scroll, or drag things around, just like a human. This kind of AI is called a computer use agent, and it works in a way that’s very similar to a self-driving car.

The Similarities Are Clear

Self-driving cars look at the road and decide how to drive: whether to turn, speed up, slow down, or stop. In the same way, computer use agents look at a screen and decide how to interact with it: where to click, what to type, or how to move the mouse.

In both cases, the AI needs to:

- See what’s happening (visual input)

- Understand the situation (context)

- Decide what to do next (action plan)

- Do it (take the action)

This cycle of seeing, understanding, deciding, and acting is what makes both self-driving cars and computer use agents work.
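To make that cycle concrete, here is a minimal sketch in Python. It is only an illustration, not how any real agent is built. Every name in it (`FakeScreen`, `Action`, `decide`, `run_agent`) is made up for this example. The "screen" is a toy login form held in memory, and the "understanding" step is a few hand-written rules where a real agent would use a vision model looking at pixels.

```python
from dataclasses import dataclass


@dataclass
class Action:
    """One thing the agent can do. All names here are hypothetical."""
    kind: str          # "click", "type", or "done"
    target: str = ""   # what to click on
    text: str = ""     # what to type


class FakeScreen:
    """A toy stand-in for a real screen: a login form with two fields and a button."""

    def __init__(self):
        self.fields = {"username": "", "password": ""}
        self.focused = None
        self.submitted = False

    def observe(self):
        # See: a real agent would receive a screenshot; this toy returns a dict.
        return {
            "fields": dict(self.fields),
            "focused": self.focused,
            "submitted": self.submitted,
        }

    def apply(self, action):
        # Act: carry out the chosen action on the toy screen.
        if action.kind == "click":
            if action.target == "submit":
                self.submitted = True
            else:
                self.focused = action.target
        elif action.kind == "type" and self.focused is not None:
            self.fields[self.focused] += action.text


def decide(observation, goal):
    # Understand and decide: compare what is on screen with the goal
    # and pick the single next action.
    if observation["submitted"]:
        return Action("done")
    for name, wanted in goal.items():
        if observation["fields"][name] != wanted:
            if observation["focused"] != name:
                return Action("click", target=name)
            return Action("type", text=wanted)
    return Action("click", target="submit")


def run_agent(screen, goal, max_steps=20):
    """Repeat see -> understand -> decide -> act until done or out of steps."""
    history = []
    for _ in range(max_steps):
        observation = screen.observe()
        action = decide(observation, goal)
        if action.kind == "done":
            break
        screen.apply(action)
        history.append(action)
    return history


if __name__ == "__main__":
    screen = FakeScreen()
    for step in run_agent(screen, {"username": "ada", "password": "s3cret"}):
        print(step)
```

The shape of the loop matters more than the details: the agent never reaches inside the form directly. It only observes, picks one action, acts, and then looks again, much as a driving system re-checks the road after every maneuver.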

Why Computer Use Agents Matter

Most of today’s work happens on computers. People fill out forms, send emails, copy and paste data, and use all kinds of software. But these tasks can be repetitive and time-consuming. What if we could train AI to do them for us?

That’s what computer use agents can do. They can:

- Help with customer service by navigating systems like a human

- Handle data entry by copying info between programs

- Perform software testing by acting like a real user

- Improve accessibility by helping people with disabilities use computers more easily

And the best part? They don’t need special access to the software. They just need to “see” the screen, like a person would.

Why This Is Happening Now

Thanks to recent breakthroughs in AI, especially in computer vision and machine learning, we now have the tools to train these smart agents. Models can learn from watching people use computers and then do the same things themselves. They can even handle new or unfamiliar software, just as a self-driving car can handle a new road.

Just as self-driving cars had to learn to deal with unexpected situations, computer use agents are now being tested on how well they can adapt to different apps and websites.

Looking Ahead

The future is bright. The lessons from self-driving technology can help improve computer use agents, and vice versa. In time, these digital agents may become a normal part of how we work and live, quietly handling tasks in the background and saving us time and effort.

Whether it’s driving a car or using a computer, AI is learning to do more by simply watching and acting. We’re entering a new era of smart assistance.

Welcome to the next step in AI: teaching it to use screens as we do.